How Does Text Encoding Actually Work? (ASCII, Unicode, and UTF-8 Explained)

When you press the letter 'A' on your keyboard, a cascade of silent, invisible events occurs. Within a fraction of a second, your keystroke is converted into a series of electrical pulses, interpreted by your computer, and finally displayed back to you as the clean, familiar shape of the letter 'A'. We take this process for granted thousands of time a day, but it relies on a foundational concept of computing that is both brilliantly simple and profoundly complex: text encoding.

Have you ever copied text from one place to another and seen it turn into a garbled mess of strange symbols, like ’ instead of an apostrophe or • instead of a bullet point? This common frustration, known as "mojibake," is the result of a miscommunication about text encoding. It’s like two people trying to have a conversation, but each is using a different secret codebook.

This guide will demystify the world of text encoding. We will explore how computers, which only understand 1s and 0s, are able to comprehend and represent the vast universe of human language. We'll take a journey through the history and mechanics of the three most important standards you need to know: the humble pioneer, ASCII; the universal library, Unicode; and the dominant encoding of the modern web, UTF-8.

The Fundamental Problem: Teaching a Machine to Read

At its most basic level, a computer is a machine that runs on electricity. It only understands two states: "on" and "off." We represent these two states using binary digits, or "bits": 1 for on, and 0 for off. Every piece of information on a computer—a program, an image, a video, and, most importantly, text—must ultimately be broken down into a long sequence of these 1s and 0s.

The challenge, then, is to create a system that can reliably translate the characters we use (like A, B, C, ?, and !) into a binary format that a computer can store and process. This system has two key parts:

- Character Set: This is essentially a dictionary or a codebook. It is a collection of characters and a unique number assigned to each one. For example, a character set might define that

Ais65,Bis66, and so on. This number is called a "code point." - Character Encoding: This is the specific set of rules that dictates how to convert a character's code point (its number) into a sequence of binary bits. It's the method for writing the number down in a way the computer can understand.

Think of it like this: The character set is the agreement that the symbol for "house" corresponds to the number 10. The encoding is the agreement on how to write the number 10 in binary (1010). Both parts are essential for clear communication.

The Pioneer: ASCII and the Dawn of a Standard

In the early days of computing, different manufacturers had their own proprietary systems for encoding text, leading to chaos. To solve this, a committee developed a universal standard in the 1960s called ASCII, which stands for the American Standard Code for Information Interchange.

How ASCII Works

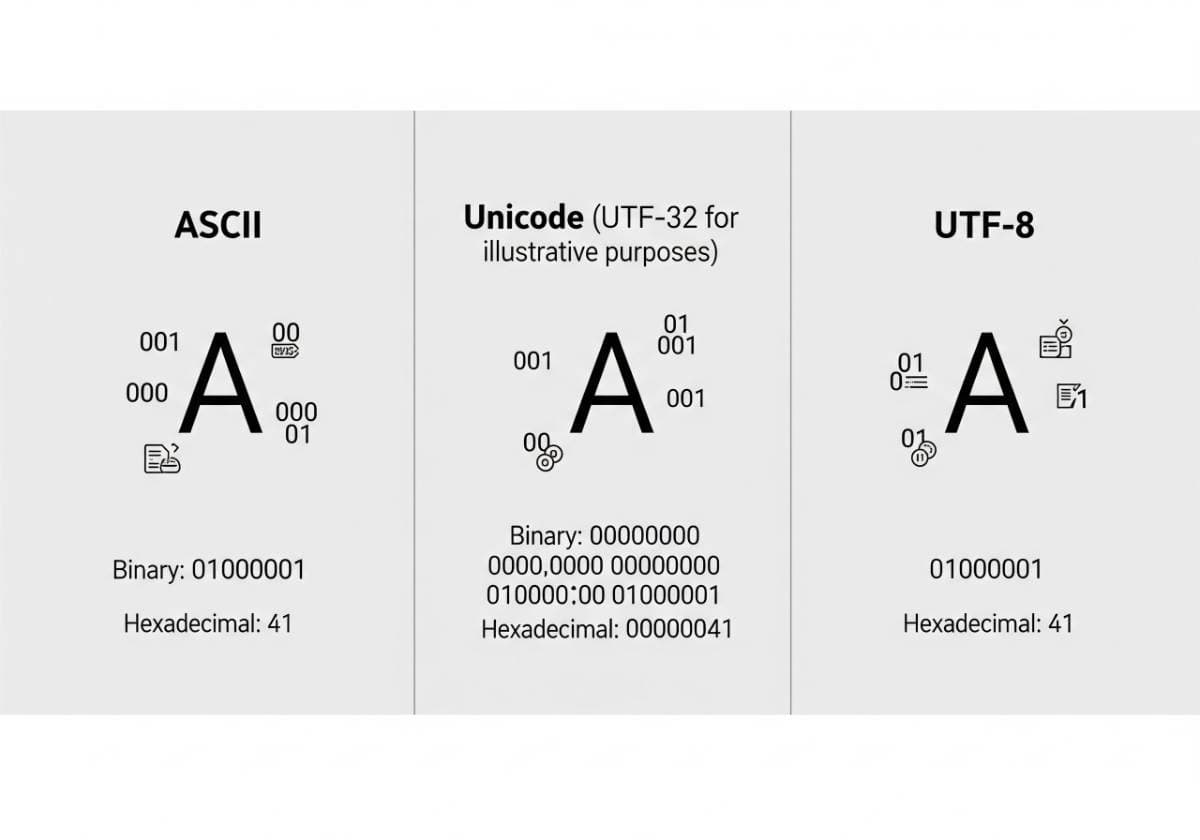

ASCII was designed to be simple and efficient for the technology of its time. It is a 7-bit encoding. This means that each character is represented by a sequence of 7 bits. With 7 bits, you have 2^7, or 128, possible unique combinations of 1s and 0s.

These 128 slots were enough to cover the essentials for American English communication:

- Uppercase English letters: A-Z (Code points 65-90)

- Lowercase English letters: a-z (Code points 97-122)

- Numbers: 0-9 (Code points 48-57)

- Common punctuation and symbols:

!,@,#,$,%,&,*,(,), etc. - Control Characters: Non-printable characters used to control devices, like the newline character (to start a new line) and the tab character.

For example, in ASCII:

- The character

Ais code point65, which is1000001in binary. - The character

Bis code point66, which is1000010in binary.

The Major Limitation of ASCII

ASCII was a huge success and became the foundation of computing for decades. However, it had one massive, built-in limitation: it was designed by Americans, for English. The 128 available slots had no room for characters with accents (like é, ñ, ü), characters from other alphabets (like Greek α, Cyrillic Д, or Hebrew א), or symbols that became important later, like the Euro sign €. The digital world was growing, and ASCII's Anglocentric view was no longer sufficient.

The Global Solution: Unicode, The Universal Library of Characters

As computing became global, the limitations of ASCII led to the creation of hundreds of different, often conflicting, character encodings. A computer in France might use ISO-8859-1 to handle characters like é, while a computer in Russia might use KOI8-R for Cyrillic letters. If you opened an email written in one encoding on a system configured for another, you would get mojibake—the garbled, nonsensical text that became a common frustration of the early internet.

To solve this chaos once and for all, the Unicode standard was created.

What Unicode Is (and Isn't)

It is crucial to understand this key point: Unicode is not an encoding. It is a universal character set. Think of it as a gigantic, unified library or dictionary for every character imaginable. Its ambitious goal is to provide a single, unique number—a code point—for every character from every language, both modern and historical. This includes all alphabets, symbols, punctuation marks, and, famously, emojis.

The standard way to write a Unicode code point is with U+ followed by its hexadecimal number.

AisU+0041éisU+00E9ДisU+0414- The Euro sign

€isU+20AC - The "Pile of Poo" emoji

💩isU+1F4A9

Unicode solves the "dictionary" part of the problem. It provides one universal map from character to number. But it doesn't, by itself, specify how to store those numbers in binary. That is the job of a Unicode encoding, and the most important one by far is UTF-8.

The Dominant Encoding: UTF-8 Explained

With Unicode defining potentially millions of characters, how should they be stored? One simple idea would be to use 4 bytes (32 bits) for every character. This system, called UTF-32, could represent every possible Unicode code point. However, it would be incredibly inefficient. A simple text file written in English would become four times larger than its original ASCII size, wasting a huge amount of storage and bandwidth.

The world needed a smarter solution, and that solution was UTF-8, which stands for Unicode Transformation Format - 8-bit.

The Genius of Variable-Width Encoding

The brilliance of UTF-8 is that it is a variable-width encoding. This means it uses a different number of bytes to represent different characters, making it incredibly efficient.

- For any character in the original ASCII set (A-Z, 0-9, etc.): UTF-8 uses only one byte (8 bits). The binary representation is identical to ASCII.

- For characters with accents and other common symbols (like é, ©, €): UTF-8 uses two bytes.

- For most other major world languages (like Chinese, Japanese, Korean, Cyrillic, Greek, Hebrew, Arabic): UTF-8 uses three bytes.

- For very rare historical scripts, mathematical symbols, and most emojis: UTF-8 uses four bytes.

The Benefits of UTF-8

This variable-width approach gave UTF-8 two killer features that led to its global domination.

Backward Compatibility with ASCII

This was the masterstroke. Because UTF-8 uses the exact same binary representation for the first 128 characters as ASCII, any text file that was pure ASCII is also a valid UTF-8 file. This meant that the entire existing ecosystem of English-language text on the internet didn't need to be converted. Systems could adopt UTF-8 without breaking any old content, making the transition seamless.

Space Efficiency

UTF-8 doesn't waste space. An email written in English takes up the same amount of space as it did in 1980, but that same email can now also contain a Japanese name, a Greek quote, and an emoji without any issues. This efficiency is why UTF-8 is now the standard for the web, used by over 98% of all websites.

A Quick Look at Other Encodings: UTF-16 and UTF-32

While UTF-8 rules the web, you might encounter other Unicode encodings.

- UTF-16: This is also a variable-width encoding, but its basic unit is 2 bytes (16 bits). It uses 2 bytes for most common modern characters (including many Asian languages) and 4 bytes for rarer characters and emojis. It's used internally by operating systems like Windows and programming environments like Java, but it's less common for web pages because it lacks that perfect backward compatibility with ASCII.

- UTF-32: This is a fixed-width encoding. It uses 4 bytes for every single character. This makes it very simple to work with in programming (as every character is the same size), but it is very wasteful in terms of storage space.

Practical Implications: Why This Matters to You

Understanding this distinction between Unicode and UTF-8 has real-world consequences.

Setting the Correct Encoding in HTML

When a web browser loads a webpage, it needs to know which "codebook" to use. You tell it by including a simple meta tag in the <head> section of your HTML document:

<meta charset="UTF-8">

This single line instructs the browser to interpret the file using the UTF-8 encoding rules, ensuring that all characters, from any language, are displayed correctly.

Saving Your Text Files Correctly

If you are a developer or work with text files, your text editor (like VS Code, Notepad++, or Sublime Text) allows you to choose the encoding when you save a file. Unless you have a very specific reason not to, you should always choose "UTF-8". This will ensure your file is compatible with almost any system in the world.

Storing Data in Databases

Databases also need to know how to handle text. When setting up a database (like MySQL), choosing the right character set and collation is crucial. Modern standards like utf8mb4 are essential for ensuring that your application can store any text your users might enter, including international characters and emojis.

Conclusion: The Silent Language of Computers

The journey from a simple keystroke to a character on your screen is a story of elegant problem-solving. ASCII was the clever but limited first draft. Unicode was the ambitious, global library that catalogued all of human language. And UTF-8 was the ingenious, efficient implementation that allowed this universal library to become the practical standard for our interconnected world.

Understanding text encoding is understanding the fundamental bridge between the richness of human language and the simple binary logic of a computer. The next time you see a webpage that perfectly blends English, Japanese, and emojis, you can appreciate the silent, beautiful work of Unicode and its most valuable player, UTF-8.

Comments (0)

Leave a Comment

Your feedback and thoughts are welcome.

Be the first to comment!